This article originally appeared in The Bar Examiner print edition, Fall 2018 (Vol. 87, No. 3), pp. 3–6.

By Judith A. Gundersen

As I wrap up my first year as NCBE president, I’ve spent a lot of time reflecting on the successes and challenges of the past year as well as contemplating new initiatives and possibilities for the upcoming year.

In terms of successes, we are very pleased with the progress made thus far by the Testing Task Force. We appointed the Task Force at the beginning of this year to undertake a three-year study to ensure that the bar examination continues to test the knowledge, skills, and abilities required for competent entry-level practice in the 21st century. In September we met with our independent research consultants to begin the first phases of the study. We are committed to devoting significant resources to this study to ensure that it is comprehensive and empirical, and that stakeholder input is obtained.

We are also well into the process of transitioning the MPRE from a paper-based testing format to a computer-based testing (CBT) format. Full implementation won’t be realized until 2020, but once it is complete, examinees will benefit from testing under uniform conditions. Test development, examinee performance analysis, and security will benefit, too, as moving to CBT allows us to pretest more items (all MPRE questions are pretested as unscored items to evaluate their performance for use on a future exam), analyze exam performance data more comprehensively, and quickly identify cheating (although we expect opportunities for cheating to decrease with CBT).

Our Investigations Department launched its new online character report application in March, which greatly modernized and improved the applicant and jurisdiction experience. This achievement was the culmination of nearly two years of planning and work by our IT and Investigations Departments. If you are using the new online application in your jurisdiction, we’d love your feedback.

Another success from the past year is the growth of the Uniform Bar Examination and its positive implications for the mobility of new lawyers. As of the July 2018 exam, over 100,000 examinees have earned a UBE score since the first administration of the UBE in February 2011. We anticipate that approximately 14,000 UBE examinees will have transferred a UBE score by the end of the year. (We don’t yet have July figures to add to the current total of transferred UBE scores.) And we are now at 34 UBE jurisdictions: the latest jurisdiction to adopt the UBE is Ohio, which will administer its first UBE in July 2020. Welcome aboard, Ohio.

To be sure, there have been challenges in the past year, too. Low bar pass rates and record-low scores dominate this category for me and the NCBE staff. Failing the bar exam is a devastating experience for examinees, and no one (not legal educators, bar examiners, courts, bar associations, legal employers, the public, or NCBE) likes to see falling MBE mean scores and pass rates. As stakeholders in the law student–to–lawyer pipeline, we all like to see that pipeline flowing and see a new generation of lawyers serving the public. We are mindful, though, of the role that we play as regulators of the profession. That role is rooted in protecting the public and in doing so objectively and fairly for applicants. So, notwithstanding our distress over low pass rates, we respect bar exam results as the product of exam development, administration, quality control, and scoring protocols that are thoroughly vetted and implemented according to high-stakes test measurement standards.

There are those who say that scores are declining because the bar exam has gotten harder; that claim is false. The statistical process of equating accounts for variability in difficulty from one test form to another by translating raw scores into scaled scores (an important aspect of standardizing a standardized test). For example, if the questions on a given test form are harder than those on a previous form, examinees will have to answer fewer questions correctly (i.e., obtain a lower raw score) to get the same scaled score as that obtained on an easier version of the exam (where examinees would have to answer more questions correctly).¹ Equating ensures that a reported scaled score has a consistent meaning across test administrations.
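To make the idea concrete, here is a simplified sketch of linear equating under a random-groups design, in which the two groups of examinees are assumed to be comparable in ability, so that any difference in their raw-score statistics is attributed to form difficulty. It is an illustration only, not a description of the MBE’s actual equating procedure; the form statistics and the raw-to-scale conversion (`to_scale`, with its slope and intercept) are hypothetical values chosen for readability.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FormStats:
    """Raw-score mean and standard deviation for one test form (hypothetical values)."""
    mean: float
    sd: float


def linear_equate(raw: float, new_form: FormStats, ref_form: FormStats) -> float:
    """Map a raw score on the new form to the equivalent raw score on the reference form.

    Assumption: randomly equivalent groups took the two forms, so differences
    in form statistics reflect form difficulty rather than examinee ability.
    """
    z = (raw - new_form.mean) / new_form.sd  # standing relative to the new form
    return ref_form.mean + z * ref_form.sd   # same standing on the reference form


def to_scale(ref_raw: float, slope: float = 0.5, intercept: float = 80.0) -> float:
    """Hypothetical fixed conversion from reference-form raw scores to the reporting scale."""
    return slope * ref_raw + intercept


if __name__ == "__main__":
    harder = FormStats(mean=110.0, sd=15.0)  # new form: examinees answer fewer correctly
    easier = FormStats(mean=116.0, sd=15.0)  # reference form

    # A raw 110 on the harder form lines up with a raw 116 on the easier form...
    print(linear_equate(110.0, harder, easier))  # 116.0
    # ...so both earn the same scaled score.
    print(to_scale(linear_equate(110.0, harder, easier)), to_scale(116.0))  # 138.0 138.0
```

Note the direction of the adjustment: on the harder form, a raw score of 110 earns the same scaled score that requires a raw score of 116 on the easier form, which is the behavior the paragraph above describes.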

As discouraging as the July 2018 MBE results may be (the national average MBE score for July 2018 was 139.5, a decrease of about 2.2 points from the July 2017 average), we have confidence in them. (As I write this column, some jurisdictions are still in the process of grading the written portion of their exams, so ultimate pass/fail results are not yet known.) Our equating process and results for the July 2018 MBE were independently checked and replicated by the Center for Advanced Studies in Measurement and Assessment (CASMA), one of the premier psychometric groups in the world—a step we first implemented with the July 2014 examination and have continued to employ since then. CASMA recently completed its independent equating process for the July 2018 exam and reached the same score results as we did.

That is cold comfort for law schools and examinees. Unlike typical recent February results (where likely repeat test takers, whose performance lags significantly behind that of first-time takers, make up approximately 70% of all examinees), there is no single factor that clearly stands out as the driving force behind the low scores in July 2018. Performance was down for examinees in all categories (see this issue’s Testing Column, in which Dr. Mark Albanese presents data on the performance of the July 2018 examinees by first-timer and repeater status). What accounts for this level of performance? As Dr. Albanese notes in his column, it would be ideal to have a complete record of each examinee’s academic performance to get at the root of the decline in scores, but we do not have this information. There is a lot we don’t know about individual examinees, including where they went to law school, what their grades were, what courses they took, whether they had to work while studying for the bar exam, and so on. We also don’t know individual examinees’ LSAT scores. This is the kind of information that would help us better understand what’s behind these examinees’ performance.

The 25th percentile LSAT score provides an important indicator of future bar exam performance. According to Law School Transparency, a nonprofit organization that provides information and analysis on legal education issues, for the class entering law school in 2015, which made up the majority of the July 2018 exam takers, 39.1% of law schools had 25% or more of their class with LSAT scores below 150, which put these examinees at a “high” risk (or “very high” or “extreme” risk, depending on how far below 150 an examinee’s score fell) of not passing the bar exam. (For the class entering law school in 2014, that percentage was 38.3%.)²

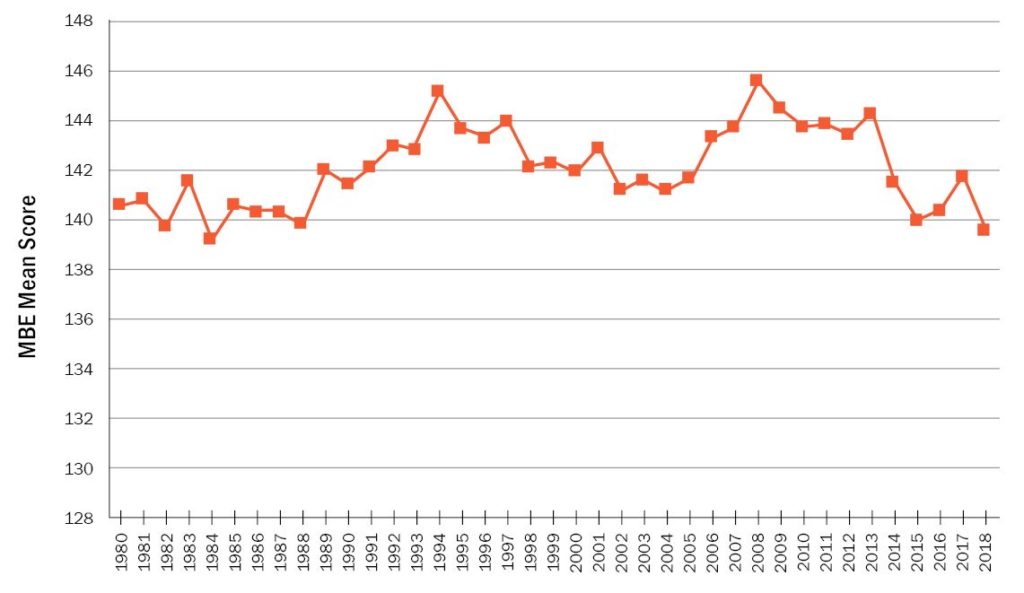

So maybe the results aren’t surprising, but they certainly are disappointing. A look at long-term July MBE mean scores, as illustrated in the following figure, shows sustained trends but also fluctuations from one July to the next over the past 38 years. This offers perspective and context, perhaps, if not comfort.

July MBE Mean Scores, 1980–2018

Disappointment can fuel action, though, which is a nice segue to announcing some of NCBE’s initiatives for the coming year. I’m delighted and proud to note that NCBE is poised to collaborate with another stakeholder in the legal education and admissions pipeline to enhance diversity in the profession by helping with bar readiness for disadvantaged groups. While we are not yet able to write about the details of this collaborative effort, an announcement will be made by year’s end. We believe that it is important for all examinees, not just those who have the financial means, to have access to bar preparation materials to promote readiness. Another measure we are undertaking is an overhaul of our study aids to make them more personalized, engaging, and effective for bar preparation. More information on this initiative will also be announced soon. Finally, NCBE is actively exploring other strategic alliances aimed at improving our ability to conduct and publish bar exam–related research and to educate all in the legal education and admissions community about measurement principles that are so important to a fair, valid, and reliable exam.

NCBE’s commitment to providing high-quality educational opportunities for admissions authorities’ staff members, courts, and bar examiners remains strong. This fall, for example, we offered training to new bar examiners and administrators at our Best Practices in Testing mini-conference. Over 70 people attended the mini-conference to learn about test measurement, development, administration, grading, security, and ADA testing accommodations. Several law faculty members have asked us about providing an academic support conference in the upcoming year, and I am pleased to say that our Education Committee is considering how best to sponsor such an event.

Challenges, rewards, opportunities. I’m excited about the possibilities that lie ahead. Here’s to the upcoming year!

Finally, I would like to acknowledge the change in leadership on the NCBE Board of Trustees. I must give Justice Rebecca White Berch my most sincere thanks for her leadership at NCBE during this past year of transition. She was infinitely patient, calm, and supportive. She opened doors for me to the admissions world outside 302 South Bedford Street and paved the way for me to do my best to represent NCBE. I am very grateful to have had her wise counsel and support. She will continue to serve on the Board as immediate past chair for the coming year.

Our 2018–19 chair is Michele Gavagni, the executive director of the Florida Board of Bar Examiners. Missy, as we know her, brings a wealth of experience to the role of chair. I am always struck by her level of preparation and by the insightful questions she poses that reflect her experience and thorough knowledge of all things bar admissions–related. I am looking forward to working with Missy in the upcoming year.

I wish to welcome Augustin Rivera, Jr., of the Texas Board of Law Examiners as the newest member of our Board. Augie, as we know him, has served on two of our test policy committees and has a breadth of experience as a lawyer and as a volunteer on the Texas Board. We are excited to have Augie join us.

Any transition in Board leadership means that someone leaves the Board, and this time we are saying aloha to Bobby Chong of Hawaii. Bobby has served on our Board for nine years. We have been enriched by his generosity of spirit, his love of Hawaii that he has shared with us, and his passion for public service in admissions in Hawaii and on the national scene.

Until the next issue,

Judith A. Gundersen

Notes

1. This raw-to-scaled score conversion has been known to cause confusion, as evidenced by what occurred with the June 2018 SAT; see Scott Jaschik, “An ‘Easy’ SAT and Terrible Scores,” Inside Higher Ed, July 12, 2018, available at www.insidehighered.com/admissions/article/2018/07/12/surprisingly-low-scores-mathematics-sat-stun-and-anger-students.

2. Law School Transparency Data Dashboard, Law School Enrollment, https://data.lawschooltransparency.com/enrollment/admissions-standard/ (last visited Oct. 8, 2018).
